(Original title: If Google Teaches an AI to Draw, Will That Help It Think?)

Netease Technology News, June 9: The Atlantic writes that when humans first drew pictures on rocks, they made a huge cognitive leap. Now computers are learning to do the same thing. If Google teaches an AI to draw, will that help it think, and think like a human?

The following is the main content of the article:

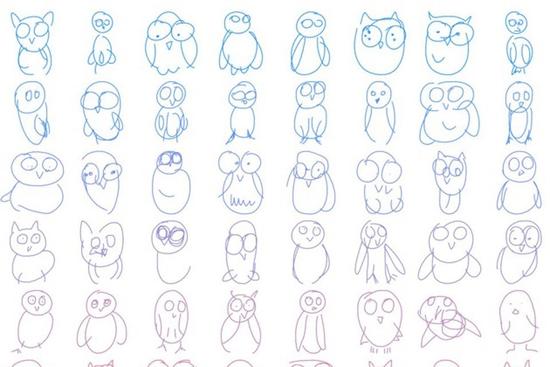

Imagine someone asks you to draw a pig and a truck. You might draw something like this:

That is simple enough. But now imagine that you are asked to draw a pig truck. As a human being, you intuitively work out how to combine the features of a pig and a truck. Perhaps you would draw the following:

Look at the small, curly pig's tail, and at the rounded window in the cab, which also suggests an eye. The wheels have become hoof-like, or the pig's feet have become wheel-like. If you drew this, I would subjectively judge it a very creative interpretation of a "pig truck."

Google's AI Drawing System, SketchRNN

Until recently, only humans could make this kind of conceptual leap. That is no longer the case. The pig truck is actually the work of SketchRNN, a fascinating artificial intelligence system that is part of a new Google project exploring whether AI can create art. The project is called Project Magenta and is led by Doug Eck.

Last week, I visited Eck at the Google Brain team's office in Mountain View, which is also home to the Magenta project. Eck is very smart and modest. He received his Ph.D. in computer science from Indiana University in 2000 and has since focused on music and machine learning. He began his career as a professor at the University of Montreal (a hotbed of artificial intelligence) and later joined Google, where he first worked on the Google Music service before moving to the Google Brain team to work on Magenta.

According to Eck, his ambition to build an AI tool for the creative arts started out as little more than talk. "But after thinking it over several times, I thought, 'We certainly need to do this, it's very important.'"

As he and his colleague David Ha wrote, the point of SketchRNN is not just to learn how to draw, but to "summarize abstract concepts in a human-like manner." They do not want to build a machine that can draw pigs. They want to build a machine that can recognize and output "pig characteristics," even when it is given a prompt, such as a truck, that is not a pig.

The implicit idea is that when people draw, they abstract the world. They draw the general concept of "pig" rather than a specific animal. In other words, there is a link between how our brains store "pig characteristics" and how we draw pigs. By learning how to draw a pig, a machine may learn something of the human brain's ability to abstract the characteristics of a pig.

This is how Google's software works. Google built a game called "Quick, Draw!" that, as people play it, generates a large database of human drawings of all kinds of things (pigs, rain, fire trucks, yoga poses, gardens, owls, and so on).

When we draw, we compress the colorful, bustling world into a few lines or strokes. These simple strokes make up SketchRNN's underlying dataset. Using Google's open source TensorFlow software library, each category of drawing (cats, yoga poses, rain) can be used to train a particular neural network. This differs from the photo-based drawing systems that have attracted widespread media coverage, such as machines that render photos in the style of Van Gogh or the original Deep Dream, or that let you draw any shape and then fill it with "cat features."

Those projects feel uncanny. They are interesting because the images they produce look as if they came from human observation of the real world, though not quite.

Expressing What Is Seen in Drawings, as Humans Do

SketchRNN's output, however, is not uncanny at all. "They feel very real," Eck said. "I don't want to say 'much like human works,' but they feel very real in a way that those pixel-generation tools do not."

This is the core insight of the Magenta team. "Humans ... do not understand the world as a grid of pixels, but develop abstract concepts to represent what we see," Eck and David Ha wrote in a paper describing their work. "As children, we were already able to convey what we saw by drawing on paper with pencils or crayons."

If humans can do this, Google wants machines to do it too. Last year, Google CEO Sundar Pichai declared that his company is "AI first." For Google, AI is a natural extension of its original mission to "organize the world's information and make it universally accessible and useful." What is different now is that the information is organized by artificial intelligence and then made available to a wide range of users. Magenta is one of Google's wilder attempts to organize and understand a particular human domain.

Machine learning is the umbrella term for the various tools Google uses. It is a way of programming a computer to teach itself how to perform tasks, usually by feeding it data to "train" on. A popular approach is to use neural networks loosely modeled on the connections in the human brain. The nodes (artificial neurons) are connected to one another with different weights, and they respond to some inputs but not to others.
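To make the idea of a weighted node concrete, here is a minimal sketch of a single artificial neuron in Python. The weights, bias, and inputs are hypothetical values picked for illustration, and the sigmoid activation is one common choice rather than anything the article specifies.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into (0, 1) by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

# Hypothetical weights: this node cares about the first input and ignores the second.
weights = [4.0, 0.0]
bias = -2.0

strong = neuron([1.0, 0.0], weights, bias)  # first input active: high response
weak = neuron([0.0, 1.0], weights, bias)    # only second input active: low response
print(round(strong, 3), round(weak, 3))
```

The same node responds strongly to one input pattern and barely at all to another, which is the behavior the paragraph above describes. Training amounts to adjusting the weights and bias.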

In recent years, multi-layer neural networks have proven very successful at solving hard problems, especially in translation and in image recognition and manipulation. Google has rebuilt many of its core services on these new architectures. These networks mimic the workings of the human brain, and their interconnected layers can recognize different patterns in the input (such as images). Lower layers may contain neurons that respond to simple pixel-level patterns of light and dark. A higher layer may respond to a dog's face, a car, or a butterfly.

Networks built this way have produced remarkable results. Computational problems that were once extremely hard became a matter of tuning a model's training and then letting some graphics processing units work on it for a while. As Gideon Lewis-Kraus described in The New York Times, Google Translate was a complex system that had been developed over more than 10 years. The company then rebuilt it around a deep learning system in just nine months. Lewis-Kraus wrote that the AI system improved overnight by roughly as much as the old system had over its entire lifetime.

Because of this, the number and variety of neural networks has exploded. SketchRNN uses a recurrent neural network, which can process sequences of input. The team trains the network on the sequences of strokes that people make as they draw different things.
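One way to picture such a stroke sequence is as a list of pen movements, each recording how far the pen moved and whether it was lifted afterwards. The sketch below is a minimal illustration in Python. The function name, the sample coordinates, and the `(dx, dy, pen_up)` layout are assumptions chosen for clarity, not a description of the team's actual data format.

```python
def strokes_to_offsets(strokes):
    """Convert strokes (lists of absolute (x, y) points) into (dx, dy, pen_up) steps."""
    steps = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            # pen_up is 1 on the last point of a stroke, 0 otherwise
            pen_up = 1 if i == len(stroke) - 1 else 0
            steps.append((x - prev_x, y - prev_y, pen_up))
            prev_x, prev_y = x, y
    return steps

# Hypothetical drawing: a cloud outline first, then a single raindrop.
cloud_then_rain = [
    [(0, 0), (10, 5), (20, 0)],
    [(5, -5), (5, -15)],
]
sequence = strokes_to_offsets(cloud_then_rain)
for step in sequence:
    print(step)
```

Because the result is an ordered list, a sequence model sees the cloud before the rain, so the order in which a person draws becomes part of the data.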

In the simplest terms, this training is an encoding process. As data (drawings) are fed in, the network tries to work out general rules about what it is processing. Those generalizations form a model of the data, stored as the numbers that describe the tendencies of the neurons in the network.

That structure is called a latent space, or Z (zed), and it is where the information about pig characteristics, truck characteristics, or yoga-pose characteristics is stored. Ask the system to sample, as AI practitioners say, that is, to draw what it was trained on, and SketchRNN will draw pigs or trucks or yoga poses. What it draws is what it has learned.
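A rough way to think about a latent space is as a list of numbers per concept, where blending two lists gives something in between, such as a pig truck. The Python sketch below uses plain linear interpolation and made-up three-number vectors. Both are assumptions for illustration only: a real model learns much longer vectors, and the article does not say how the blend was actually computed.

```python
def interpolate(z_a, z_b, t):
    """Blend two latent vectors: t=0 returns z_a, t=1 returns z_b."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

# Hypothetical latent vectors, invented for this example.
z_pig = [1.0, 0.0, 0.5]
z_truck = [0.0, 1.0, 0.5]

z_pig_truck = interpolate(z_pig, z_truck, 0.5)  # halfway between pig and truck
print(z_pig_truck)
```

In a trained system, a decoder network would turn the blended vector back into strokes. Here the vector itself is the whole output.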

What can SketchRNN learn?

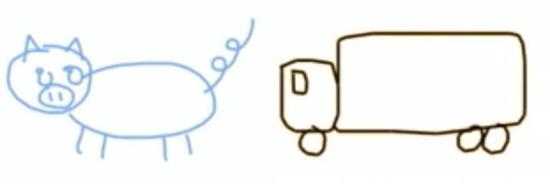

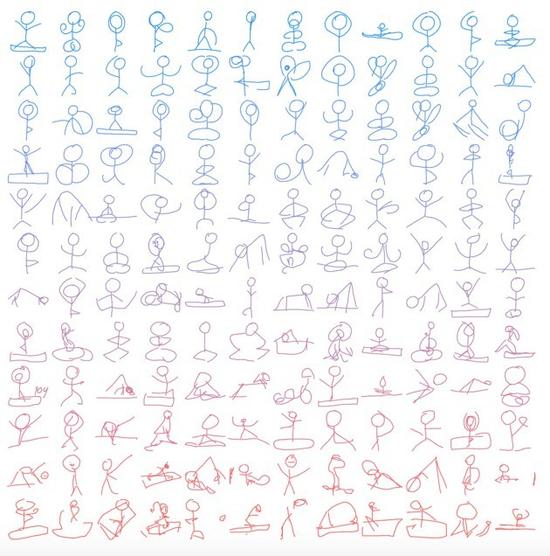

What can SketchRNN learn? Below are new fire trucks generated by a neural network trained on fire truck drawings. The model has a variable called "temperature" that lets researchers turn the randomness of the output up or down. In the images below, the bluish ones were produced with the temperature turned down, and the reddish ones with it turned up.

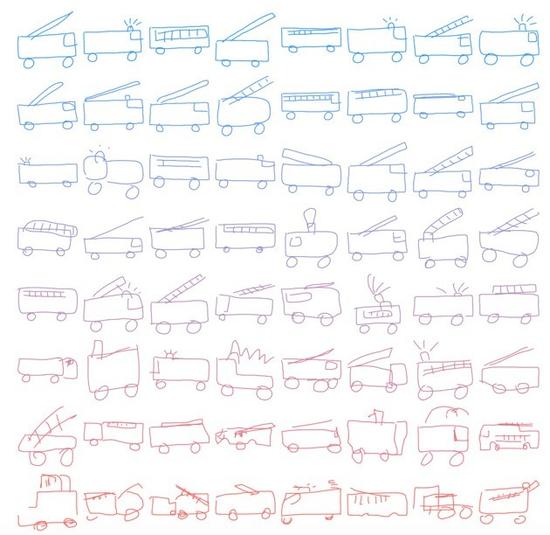

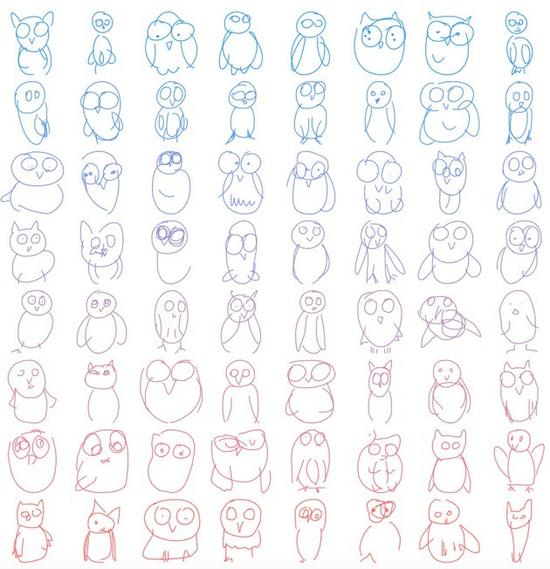
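The effect of a temperature setting can be sketched with a generic example. The Python below rescales a set of hypothetical scores before turning them into probabilities: a low temperature concentrates almost all probability on the top choice, while a high temperature spreads it out. This is a common textbook form of the idea, offered as an assumption about the general mechanism rather than SketchRNN's exact formula.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature means less randomness."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next pen movements.
scores = [2.0, 1.0, 0.0]

cold = softmax_with_temperature(scores, 0.2)  # "bluish": nearly deterministic
hot = softmax_with_temperature(scores, 5.0)   # "reddish": much more random
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
```

Sampling from the cold distribution almost always picks the most likely option, which gives tidy, typical drawings. Sampling from the hot one picks unlikely options more often, which gives stranger ones.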

Or, you may prefer owls:

One of the best examples is yoga:

These drawings look like human work, but no person drew them. They are reconstructions of how humans might draw such things. Some are very good and others less so, but if you were playing a drawing game with the AI, you could easily tell what they are.

SketchRNN can also accept input in the form of a hand-made drawing. You feed it a drawing and it tries to make sense of it. What happens if you feed a drawing of a three-eyed cat into a model trained on cat data?

Did you see? Look at the model's outputs on the right (again with blue and red representing different "temperatures"): it removes the third eye! Why? Because the model has learned that cats have two triangular ears, whiskers on both sides of the face, a round face, and only two eyes.

Of course, the model has no idea what an ear actually is, does not know whether a cat's whiskers move, does not even know what a face is, or that our eyes transmit images to our brains. It knows nothing about what these drawings refer to.

But it does know how humans represent cats, pigs, yoga poses, or sailboats.

"When we start drawing a sailboat, the model can come up with hundreds of other sailboat drawings based on the one that was entered," Eck said. "We can all see what they are, because the model uses all its training data to produce an idealized sailboat. It does not draw a specific sailboat; it draws the characteristics of a sailboat."

Being part of the artificial intelligence movement is exciting. It is one of the most exciting technology projects in history, at least for those involved, and for many others too. It can even bowl over Doug Eck.

Take the neural network trained on drawings of rain. Feed it a drawing of a fluffy cloud and the following happens:

Rain falls from the cloud you gave the model. That is because many people draw rain by drawing a cloud first and then the rain below it. If the neural network sees a cloud, it draws rain beneath that shape. (Interestingly, the data is a sequence of strokes, so if you draw the rain first, the model will not then draw a cloud.)

It is delightful work, but is it a clever side project within the long-term effort to reverse-engineer how humans think, or an important piece of the puzzle?

Eck thinks the most appealing thing about drawings is that they convey so much meaning with so little information. "You draw a smile and you can draw it with just a few strokes," he said. The strokes look nothing like a pixel-based photo of a human face, yet a 3-year-old can recognize the face and tell whether it is happy. Eck sees this as a kind of compression, an encoding that SketchRNN decodes and can then re-encode however it likes.

SketchRNN's Limited Scope

OpenAI researcher Andrej Karpathy, who currently plays an important role in disseminating AI research, said, "I strongly support the SketchRNN project. It's really cool." But he also pointed out that the team's emphasis on strokes builds strong assumptions into their model, which means its contribution to the overall development of artificial intelligence is relatively modest.

"The generative models we develop usually try to be as agnostic as possible about the details of the dataset. They should work no matter what data you feed them, whether images, audio, text, or anything else," he said. "Apart from images, none of these consist of strokes."

He added: "I'm perfectly fine with people making strong assumptions, encoding them into models, and achieving more impressive results in their specific domains."

What Eck and David Ha are building is closer to an AI that plays chess than to an AI that can work out the rules of any game and play it. To Karpathy, the scope of the current project seems limited.

Understanding Human Ways of Thinking

However, there are good reasons to think that line drawing is crucial to understanding human thought. Beyond the two Google researchers, others have been drawn to the power of strokes. In 2012, James Hays of Georgia Tech teamed up with Mathias Eitz and Marc Alexa of the Technical University of Berlin to create a sketch dataset and a machine learning system that recognizes sketches.

For them, drawing is a form of "universal communication," something that all people with standard cognitive function can do and have done. "Since prehistoric times, people have used rock drawings or cave paintings to depict the visual world," they wrote. "Such pictographs appeared thousands of years before language, and today everyone can draw and recognize sketched objects."

They cited a paper published in the Proceedings of the National Academy of Sciences by Dirk Walther, a neuroscientist at the University of Toronto, which stated that "simple and abstract drawings activate our brain in a manner similar to real stimuli." Walther and his co-authors hypothesized that line drawings "can capture the essence of our natural world," even though, pixel by pixel, a line drawing of a cat looks nothing like a photo of a cat.

If the neurons in our brain work in a layered hierarchy like the one neural networks simulate, then drawings may be a way to get at the level where we store our simplified concepts of objects (what Walther calls the "essence"). That is, they may give us a real handle on the new way of thinking that our ancestors adopted when they evolved into their modern form long ago. Drawing, whether on a cave wall or the back of a napkin, may trace the shift from recognizing a horse to recognizing the characteristics of a horse, from depicting everyday experience to abstract symbolic thought, which is also the process by which humans became modern.

Much of modern human life flows from that transition: language, money, mathematics, and eventually computing itself. So it would be fitting if drawing ended up playing an important role in the creation of significant artificial intelligence.

However, for humans, a drawing is a depiction of a real object. We can easily understand the relationship between an abstract four-line representation and the thing itself. The concept means something to us. For SketchRNN, a drawing is a sequence of strokes, a shape formed over time. The machine's task is to grasp the essence of what our drawings depict and try to use it to understand the world.

The SketchRNN team is exploring several directions. They may build a system that tries to improve its drawing through human feedback. They may train models on more than one kind of drawing. Perhaps they will find ways to test whether a model trained to recognize pig characteristics in drawings can generalize to recognizing them in photorealistic images. Personally, I would love to see their models connected to other models trained on conventional images of cats.

SketchRNN is just the "first step"

But they readily admit that SketchRNN is only a "first step" and that there is much to learn. The history of human art that these drawing-decoding machines are joining is a very long one.

In an article for The New Yorker on European cave paintings, Judith Thurman wrote that Paleolithic art remained "almost unchanged for 25,000 years," with hardly any innovation or revolt. That span, she pointed out, was "four times as long as recorded history."

Computers, and new artificial intelligence techniques in particular, are shaking long-held human ideas about what we are good at. Humans lost to machines at checkers in the 1990s, and then at chess. More recently, they lost to AlphaGo at Go.

For Eck, though, the appeal of art is not the remarkable speed of AI's recent progress (fast as it has been). It is that he and his colleagues are working to understand the basic principles of how humans think and who we are. "The true core of art is basic humanity, the way we usually communicate with each other," Eck said.

Across the deep learning movement, all kinds of people are studying the basic mechanisms of human life: how we see, how we move, how we talk, how we recognize faces, how we string words into stories, how we play music. Taken together, it begins to look like an outline of what it is to be human, rather than an outline of any particular person.

For now it is low resolution, a caricature, a stick-figure drawing of a real thinking being, but it is not hard to imagine filling in the details from this sketch. (Lebang)
