At the Computex 2018 press conference held a few days ago, AMD unveiled a set of unexpectedly high-end products, including a next-generation VEGA GPU and Zen 2 processors, both built on a 7nm process. The 7nm VEGA GPU is the world's first GPU made on a 7nm process. Samples are already shipping, and volume shipments are expected to begin in the second half of this year. This is much earlier than previously expected, and it caught Nvidia by surprise, letting AMD claim the title of "world's first 7nm GPU" in striking fashion.

In addition to the GPU, AMD announced its next-generation EPYC processor, based on the Zen 2 architecture and the 7nm process. The processor has taped out and is in laboratory testing. It is expected to reach the engineering sample stage in the second half of 2018 and to enter volume shipment in 2019.

As mobile devices have eroded the desktop PC market, Computex, a lively event at the start of this century, had faded from attention. AMD's high-end announcements at this year's show brought fresh energy both to a sluggish computer market and to Computex itself. They also sent an important signal: high-performance computing, with the data center as its main application, is taking over the baton from the PC and will be the main driving force of the computer industry over the next decade.

The potential of the data center

With the rapid development of big data and deep learning, data is becoming the crude oil of the new era, and computing power is becoming next-generation infrastructure. AMD pointed out at the press conference that the volume of data will grow 50-fold by 2025: wearable devices, IoT devices, and 5G devices are becoming widespread, and they will generate enormous amounts of data. At the same time, the ways we process this data are becoming increasingly complex. New machine learning algorithms keep emerging that can extract more useful information from data, bringing revolutionary changes to fields such as smart cities, healthcare, finance, and security. As both data volume and algorithmic complexity grow rapidly, so does the demand for computing power.

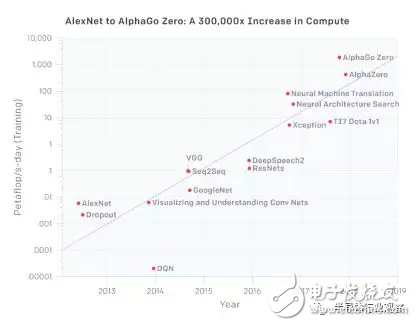

OpenAI's figures on the computing power used to train deep learning models: a 300,000-fold increase in 6 years
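To put the two growth figures above on a common footing, the sketch below converts each total increase into an equivalent constant yearly multiplier and, for training compute, a doubling time. It assumes AMD's "50x by 2025" is measured from 2018 (seven years) and takes the caption's rounded "6 years" at face value; both are assumptions, and the helper names are illustrative only.

```python
import math


def annual_growth_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time_months(total_factor: float, months: float) -> float:
    """Doubling time implied by a total_factor increase spread over `months`."""
    return months / math.log2(total_factor)


# Assumption: the 50x data growth runs from 2018 to 2025, i.e. 7 years.
data_yearly = annual_growth_factor(50, 7)
# Caption figure as quoted: 300,000x over 6 years (72 months).
compute_yearly = annual_growth_factor(300_000, 6)
compute_doubling = doubling_time_months(300_000, 72)

print(f"data volume: ~{data_yearly:.2f}x per year")
print(f"training compute: ~{compute_yearly:.1f}x per year, "
      f"doubling every ~{compute_doubling:.1f} months")
```

Under these assumptions, data volume grows by roughly 75% a year, while training compute grows roughly eightfold a year. Because the span is rounded to six years, the implied doubling time (about 4 months) comes out slightly longer than the 3.4 months OpenAI itself reported.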

The main infrastructure supplying computing power for big data is the data center. Data center demand for processors currently consists mainly of CPUs and GPUs. The CPU is traditional computing hardware that supports general-purpose computing, and it remains an indispensable part of the data center. The EPYC CPU announced by AMD this time supports up to 32 cores per socket. Beyond core count, memory access and CPU-to-CPU communication are also key to running distributed computing efficiently in a data center. Each EPYC CPU supports up to 8 memory channels and 128 PCIe lanes, which makes it a powerful part. AMD has not traditionally been strong in data center CPUs, and with Intel firmly in control of the market, how AMD breaks into the data center CPU ecosystem has become one of the main points of attention. At this press conference, AMD announced that EPYC has been adopted in products from important customers such as Cisco, HP, and Tencent Cloud. Although AMD's data center market share still lags far behind Intel's, this is a good starting point.
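What "8 memory channels per socket" means in bandwidth terms can be estimated with simple arithmetic: channels times transfer rate times bytes per transfer. The sketch below assumes DDR4-2666 memory (2666 MT/s) and the standard 64-bit (8-byte) DDR4 channel width; real sustained bandwidth is lower than this theoretical peak.

```python
def peak_dram_bandwidth_gbs(channels: int, mega_transfers_per_s: float,
                            bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: channels x transfer rate x bytes per transfer."""
    return channels * mega_transfers_per_s * 1e6 * bus_bytes / 1e9


# Assumption: DDR4-2666 DIMMs; each DDR4 channel carries 64 data bits (8 bytes).
per_channel = peak_dram_bandwidth_gbs(1, 2666)
per_socket = peak_dram_bandwidth_gbs(8, 2666)
print(f"one channel: {per_channel:.1f} GB/s, 8 channels: {per_socket:.1f} GB/s")
```

This prints roughly 21.3 GB/s for a single channel and about 170.6 GB/s for eight channels, which is why the number of memory channels matters for bandwidth-hungry data center workloads.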

Beyond the CPU, the GPU is where much of the data center's growth potential lies. In the era of big data, CPUs cannot efficiently handle every kind of workload. To support general-purpose algorithms, a large share of a CPU's die area goes to caches and control logic (such as branch prediction), which leaves relatively little area for compute units. In big data algorithms, by contrast, large amounts of data can be processed in parallel (for example, independent data generated by different devices can be processed simultaneously without affecting each other). Big data algorithms therefore tend to be fairly regular, and much of a CPU's control logic is redundant for them. This is where GPUs, which excel at parallel processing, stand out. A GPU's control logic is relatively simple and most of its die area is devoted to compute units, so a single GPU often contains thousands of compute cores and can deliver extremely efficient parallel computation. For suitable big data algorithms, a GPU can be two to three orders of magnitude faster than a CPU of the same generation.
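The payoff of spending die area on "thousands of computing cores" shows up in a simple peak-throughput formula: parallel ALU lanes times clock frequency times floating-point operations per lane per cycle, where a fused multiply-add (FMA) counts as two operations. The sketch below assumes a Vega 10-class GPU with 4096 stream processors at 1.5 GHz. It is a peak figure only and ignores memory and control-flow limits.

```python
def peak_flops(lanes: int, clock_hz: float, flops_per_lane_per_cycle: int = 2) -> float:
    """Peak throughput = parallel ALU lanes x clock x FLOPs per lane per cycle.

    The default of 2 assumes each lane retires one fused multiply-add per cycle.
    """
    return lanes * clock_hz * flops_per_lane_per_cycle


# Assumption: 4096 FP32 lanes (stream processors) running at 1.5 GHz.
gpu_peak = peak_flops(4096, 1.5e9)
print(f"{gpu_peak / 1e12:.2f} TFLOPS FP32 peak")
```

With these inputs, the result is about 12.29 TFLOPS. Reaching that figure in practice requires the regular, data-parallel workloads described above, since every lane must have independent work on every cycle.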

The landmark event for GPUs running big data algorithms in the data center was the training of AlexNet in 2012. AlexNet is a signature deep learning model. Its first contribution was to show that, given enough data, deep neural networks perform far better on image classification than traditional methods such as support vector machines (SVMs). Besides launching the current wave of deep learning, AlexNet also demonstrated training deep networks on GPUs. Compared with CPUs, this cut training time by two to three orders of magnitude and brought it into a practical range (from several years down to several days). Deep learning is inseparable from GPU support, and as deep learning continues to spread, data center demand for GPUs keeps rising.

The popularity of deep learning is one reason for the steady rise in data center GPU demand, but other GPU-hungry algorithms are driving it as well. Blockchain algorithms, for instance, are well known to consume large numbers of GPUs. In 2017, when blockchain and cryptocurrencies were at their hottest, demand from major mining operations sold GPUs out (AMD profited handsomely from this as well). Although the cryptocurrency market has since cooled, GPU demand has continued to rise steadily. Beyond blockchain, traditional applications such as databases are also gradually embracing GPU acceleration. In short, data center GPU demand is currently led by deep learning, with other fields gradually catching up. Nvidia holds a near-monopoly in the data center GPU market, and the data center business is correspondingly becoming ever more important in its financial reports: data center revenue reached 245% of the prior year's level in fiscal 2017 and 233% in fiscal 2018, approaching 2 billion U.S. dollars. Of course, AMD will not sit idly by in the data center market. Its release of the first 7nm VEGA GPU and the Radeon Instinct data center accelerator card is a clear challenge to Nvidia.

In addition to hardware, the development ecosystem is equally important

In the data center market, major customers such as BAT (Baidu, Alibaba, and Tencent) also hope AMD can break Nvidia's monopoly so that prices for high-performance GPUs return to a reasonable range. The 7nm VEGA GPU and Radeon Instinct accelerator card released this time are impressive on paper. The Radeon Instinct card carries 32GB of high-speed HBM memory, and the VEGA GPU adds hardware support for artificial intelligence and machine learning. Their actual performance remains to be seen.

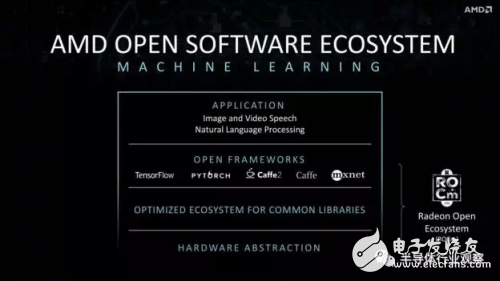

Beyond hardware performance, the developer ecosystem is also a decisive factor. Nvidia's strategy has been remarkably far-sighted. When most people still thought of GPUs only as graphics accelerators for games, Nvidia had already seen their potential in other areas, launched its GPGPU (general-purpose GPU) strategy, and began developing CUDA. After years of development and accumulation, it was well placed when the deep learning boom arrived. With stable performance, an easy-to-use API, thorough documentation, and years of developer community building, CUDA became the first choice of deep learning developers, and Nvidia GPUs consequently became standard equipment in the data center. AMD, on the other hand, was lukewarm about investing in GPGPU. Together with partners such as Qualcomm, it promoted OpenCL, a framework similar to CUDA, but OpenCL's performance and ease of use have been criticized by the developer community. Another AMD GPGPU initiative is the Heterogeneous System Architecture (HSA). HSA was intended to unify the memory spaces of the CPU and GPU and so eliminate the performance loss caused by exchanging data between them, but so far its impact has been modest.
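The "performance loss" HSA targets comes from explicitly copying data between separate CPU and GPU memories. A back-of-envelope lower bound on that cost is buffer size divided by link bandwidth. The sketch below assumes a PCIe 3.0 x16 link (8 GT/s per lane with 128b/130b encoding) and a hypothetical 4 GB buffer. Real transfers are slower than this theoretical figure.

```python
def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s.

    8 GT/s per lane, 128b/130b line encoding, 8 bits per byte.
    """
    return lanes * 8e9 * (128 / 130) / 8 / 1e9


def copy_time_s(num_bytes: float, lanes: int = 16) -> float:
    """Lower bound on the time to move a buffer across the link once."""
    return num_bytes / (pcie3_bandwidth_gbs(lanes) * 1e9)


# Hypothetical workload: a 4 GB buffer staged from host memory to the GPU.
bw = pcie3_bandwidth_gbs(16)
t = copy_time_s(4e9)
print(f"x16 link: {bw:.2f} GB/s, 4 GB copy: at least {t * 1000:.0f} ms each way")
```

This gives about 15.75 GB/s and at least roughly 254 ms per direction. That cost is paid every time data crosses the bus, which is why sharing one memory space between CPU and GPU is attractive.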

AMD has of course recognized the problems caused by its weak development ecosystem. At this press conference, it specifically highlighted its latest GPGPU initiative, the Radeon Open Ecosystem (ROCm), which provides optimized library support for mainstream machine learning frameworks such as TensorFlow, PyTorch, Caffe, and MXNet. However, AMD is still playing catch-up on the development ecosystem, especially as Nvidia's data center ecosystem has begun exploring blue-ocean markets such as GPU databases. How AMD catches up deserves close attention.

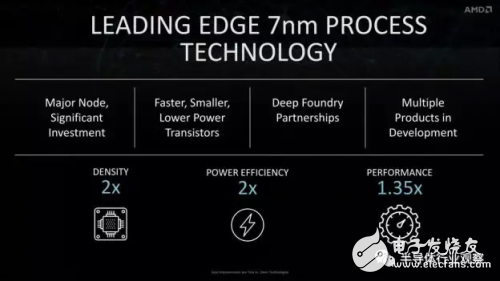

From a chip perspective, this AMD release also reveals where semiconductor process technology is heading.

AMD released figures for the 7nm VEGA GPU. Intriguingly, its performance is only 35% higher than that of the previous-generation 14nm VEGA. The feature size has been roughly halved and the design improved, yet the performance gain is modest. At the 7nm node, a smaller feature size does make transistors switch faster, but the delay caused by metal interconnects also increases, so the benefit to overall chip performance is limited. On the other hand, transistor density doubles and power consumption is halved, which essentially continues the momentum of Moore's Law.
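Why interconnect delay erodes the gains from smaller transistors can be illustrated with a first-order RC model. If a wire's width, thickness, and spacing all shrink by a factor s, its resistance per unit length grows by 1/s², while its capacitance per unit length stays roughly constant because it depends mainly on dimension ratios. Distributed RC delay scales with r·c·L². The sketch below assumes idealized uniform scaling by 0.5. It ignores real-world effects that make matters worse, such as rising copper resistivity at narrow widths and barrier layers. Node names like "7nm" and "14nm" are also not literal dimensions.

```python
def wire_delay_ratio(scale: float, wire_scales_with_node: bool) -> float:
    """First-order change in distributed RC wire delay after shrinking wire
    width, thickness, and spacing by `scale` (e.g. 0.5 halves them).

    r per unit length = rho / (width * thickness)  -> multiplied by 1 / scale**2
    c per unit length depends on dimension ratios  -> unchanged (first order)
    delay ~ r * c * length**2
    """
    r_ratio = 1.0 / scale**2
    c_ratio = 1.0
    # Local wires shrink along with the layout; global wires span a fixed die size.
    length_ratio = scale if wire_scales_with_node else 1.0
    return r_ratio * c_ratio * length_ratio**2


s = 0.5  # assumed idealized halving of all wire dimensions
print("local wire delay x", wire_delay_ratio(s, True))    # 1.0
print("global wire delay x", wire_delay_ratio(s, False))  # 4.0
```

Even in this idealized model, short local wires get no faster while transistors do, and long global wires become about four times slower. This is consistent with a modest overall performance gain despite a large increase in density.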

When shrinking feature sizes offers only limited performance gains, packaging technology becomes another driver of chip performance. One of the most important keywords for the Radeon Instinct accelerator card announced at this press conference is its 32GB of HBM memory. HBM uses advanced packaging to place the processor and DRAM in the same package. This greatly shortens trace lengths and increases trace density and bus width, delivering memory bandwidth far higher than traditional DDR memory. In fact, memory bandwidth has become a major bottleneck that prevents processors from reaching their peak computing power, so HBM will be an important technology for improving processor performance.
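The claim that memory bandwidth keeps processors from their peak can be made concrete with the roofline model, a standard way to bound performance (not something AMD presented here). Attainable throughput is the smaller of peak compute and memory bandwidth times arithmetic intensity, where arithmetic intensity means FLOPs performed per byte moved from DRAM. The accelerator numbers below (12 TFLOPS peak, 0.5 vs 1.0 TB/s) are hypothetical.

```python
def attainable_flops(peak_flops: float, mem_bw_bytes_s: float,
                     flops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bw_bytes_s * flops_per_byte)


# Hypothetical accelerator: 12 TFLOPS peak, two candidate memory bandwidths.
peak = 12e12
for bw in (0.5e12, 1.0e12):
    for intensity in (4, 50):  # FLOPs per byte of DRAM traffic
        perf = attainable_flops(peak, bw, intensity)
        print(f"bw={bw / 1e12:.1f} TB/s, intensity={intensity}: {perf / 1e12:.1f} TFLOPS")
```

At low arithmetic intensity (4 FLOPs/byte), the chip reaches only 2 or 4 TFLOPS, and doubling bandwidth doubles performance. At high intensity (50 FLOPs/byte), both configurations hit the 12 TFLOPS compute ceiling. Higher-bandwidth memory such as HBM raises the sloped, bandwidth-bound part of the roof.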

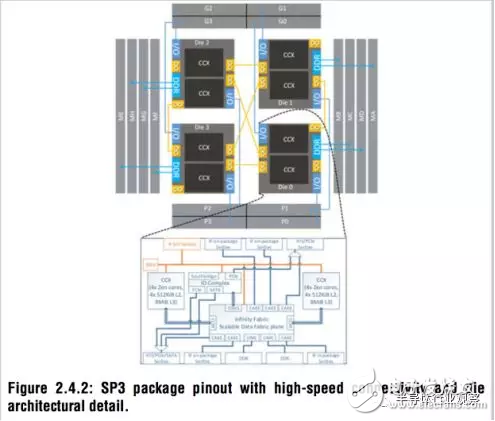

AMD also announced that it will use Infinity Fabric in its 7nm VEGA GPUs. Infinity Fabric has both similarities to and differences from Nvidia's NVLink. NVLink is mainly used to accelerate data communication between multiple GPUs, while Infinity Fabric can serve as an on-chip network (network on chip, NoC), an in-package interconnect, or an off-chip interconnect. Besides the VEGA GPUs, AMD also uses Infinity Fabric in its CPUs based on the Zeppelin architecture, which AMD presented at the ISSCC conference this year. With advanced packaging and Infinity Fabric, multiple dies can be efficiently integrated in one package. This enables flexible integration: several identical processor dies, or several different dies, can be combined as needed. AMD has long attached great importance to advanced packaging and adopted HBM memory in its GPUs several years ago. The Zeppelin architecture shows that it is continuing to tap the potential of packaging. Intel is not far behind, either; its EMIB advanced packaging technology is also among the leaders. We will continue to follow the competition among AMD, Intel, and Nvidia in advanced packaging.

Conclusion

The 7nm products AMD released at Computex this time demonstrate its determination to enter the data center market. Data center applications are expected to take over the baton from the PC and become the next driving force of the computer market. In terms of chip technology, the main advantages of the 7nm process are integration density and power consumption. Beyond shrinking feature sizes, further performance gains will also have to rely on packaging technology.
